In the ever-evolving world of big data, businesses are increasingly relying on sophisticated systems to collect, store, and analyse vast amounts of information. One of the most effective solutions for managing this data explosion is the Data Lake—a centralised repository that allows organisations to store structured, semi-structured, and unstructured data at any scale. In this blog, we will explore data lake architectures, the core design principles, and the best practices that ensure optimal performance, scalability, and data governance. Additionally, we'll touch on how enterprise data lake services, data lake solutions, and enterprise data lake consulting services can help businesses implement and optimise their data lakes.

A data lake is a storage system that holds large amounts of raw data in its native format until needed. Unlike traditional databases or data warehouses, which require data to be formatted and cleaned before storage, data lakes allow for schema-on-read. This means data is only processed and structured when it is accessed, making it highly flexible for various analytics workloads. Data lakes are a core component of many enterprise data lake solutions because they can support a wide range of analytics processes, from machine learning and predictive analytics to business intelligence and data visualisation.
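To make schema-on-read concrete, here is a minimal sketch in plain Python. The sample events, the `ORDER_SCHEMA` mapping, and the `read_with_schema` helper are all hypothetical names invented for illustration; the point is only that the raw records are stored untouched and a schema is applied when they are read.

```python
import json

# Raw events are stored exactly as they arrived; fields vary between records.
RAW_EVENTS = [
    '{"user_id": "42", "amount": "19.99", "channel": "web"}',
    '{"user_id": "7", "amount": "5", "note": "field the reader ignores"}',
    '{"user_id": "13"}',
]

# Hypothetical schema chosen by the reader at query time: field -> (type, default).
ORDER_SCHEMA = {"user_id": (int, None), "amount": (float, 0.0)}


def read_with_schema(raw_lines, schema):
    """Parse raw JSON lines and project/cast them onto the schema at read time."""
    for line in raw_lines:
        record = json.loads(line)
        yield {
            field: caster(record[field]) if field in record else default
            for field, (caster, default) in schema.items()
        }


rows = list(read_with_schema(RAW_EVENTS, ORDER_SCHEMA))
```

A different team could read the same raw lines with a different schema, for example one that keeps `channel`, without rewriting anything in storage.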

Data lakes offer virtually unlimited scalability, allowing businesses to store petabytes or even exabytes of structured, semi-structured, and unstructured data. Whether you're seeking data lake solutions in India or globally, data lakes are well suited to managing massive data volumes. As enterprises anticipate exponential data growth, they increasingly turn to enterprise data lake services to future-proof their operations.

Unlike traditional databases or data warehouses, data lakes support a wide range of data formats such as CSV, JSON, XML, Parquet, ORC, and others. This flexibility allows organisations to store raw, processed, or even semi-processed data from multiple sources, making it easier to experiment with data science and machine learning models.

Data lakes are often built on cloud storage services, which offer pay-as-you-go pricing and help businesses significantly reduce upfront infrastructure costs. With guidance from a data lake consulting firm, organisations can also move cold (rarely accessed) data to low-cost storage tiers, further optimising operational expenses.

With data lakes, businesses can ingest and store data immediately without the need for upfront structuring or transformations. This means that data can be explored in its raw format, making data lakes particularly useful for ad-hoc queries, exploratory data analysis, and evolving data-driven decision-making.

As more organisations adopt AI and machine learning in their operations, the flexibility and scalability of data lakes make them a future-proof investment. Enterprise data lake consulting can help organisations stay competitive by supporting advanced analytics, real-time data streaming, and predictive modelling.

Bonus Points: Data lake consulting plays a crucial role in enabling AI and machine learning (ML) solutions by streamlining data management and storage. With large volumes of structured and unstructured data flowing into organizations, data lakes provide a flexible and scalable environment for data processing. This speeds up data preparation, which in turn can improve the accuracy and efficiency of AI and ML models. Leveraging data lake consulting allows businesses to optimize their data infrastructure, supporting better decision-making and innovation in AI-driven projects.

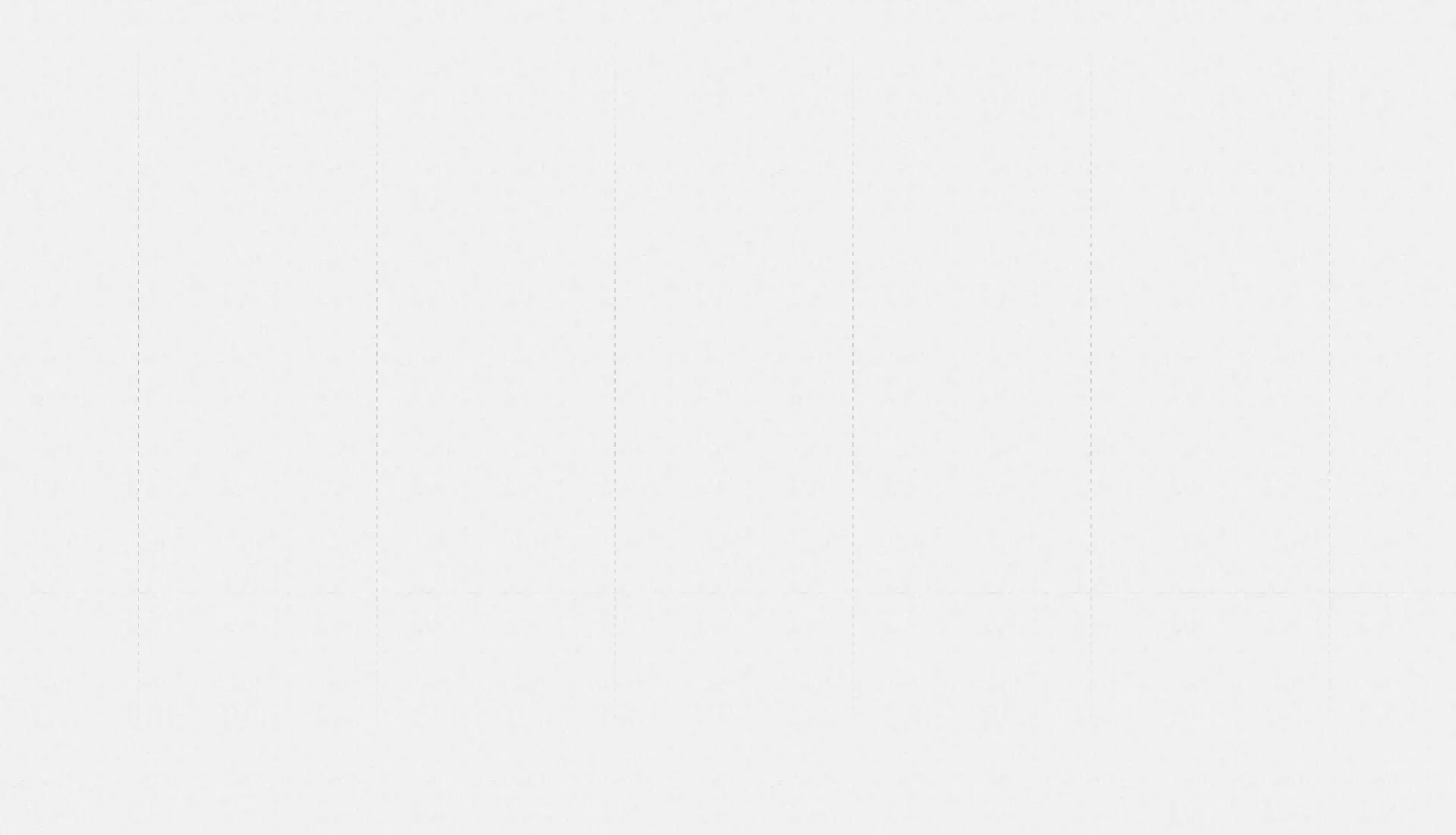

An on-premises data lake provides full control over your data storage infrastructure. This solution is commonly chosen by organisations with strict data governance, security, or compliance requirements. However, managing an on-prem data lake can be resource-intensive, requiring a significant investment in hardware, software, and IT staff to maintain and scale the system.

Cloud-based data lakes are hosted by third-party providers such as AWS, Microsoft Azure, and Google Cloud. These solutions offer high scalability, availability, and cost efficiency through a pay-as-you-go model. Cloud data lakes consulting services can help businesses implement and maintain cloud data lakes while ensuring integration with cloud-based analytics tools.

A hybrid data lake architecture combines on-premises and cloud resources, allowing businesses to enjoy the benefits of both environments. For example, sensitive data can remain on-prem for security and compliance, while less sensitive data is stored in the cloud for cost optimisation and scalability.
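The routing rule described above can be sketched in a few lines of Python. The sensitivity labels, the `Dataset` class, and the `choose_location` function are assumptions made up for this example; a real deployment would take its labels from its own data classification policy.

```python
from dataclasses import dataclass

# Hypothetical sensitivity labels; a real deployment would take these from its
# own data classification policy.
SENSITIVE_LABELS = {"pii", "phi", "financial"}


@dataclass(frozen=True)
class Dataset:
    name: str
    labels: frozenset


def choose_location(dataset: Dataset) -> str:
    """Return 'on_prem' for datasets carrying any sensitive label, else 'cloud'."""
    if dataset.labels & SENSITIVE_LABELS:
        return "on_prem"
    return "cloud"


patient_records = Dataset("patient_records", frozenset({"phi"}))
clickstream = Dataset("clickstream", frozenset({"marketing"}))
placement = {d.name: choose_location(d) for d in (patient_records, clickstream)}
```

Keeping the decision in one small function makes the placement policy easy to audit and to change when compliance requirements shift.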

Multi-cloud data lake solutions leverage multiple cloud providers to store and manage data across different platforms. This approach offers businesses the flexibility to choose the best provider for specific use cases (e.g., AWS for machine learning and Google Cloud for data analytics) and to reduce risks associated with vendor dependency. It also enhances business continuity by ensuring data is stored redundantly across multiple cloud environments.

A foundational framework for building distributed data lakes, Hadoop enables businesses to store and process vast amounts of data across multiple nodes. It supports various file systems, including HDFS and S3, making it essential for enterprise data lake services that need both batch processing and real-time analytics.

AWS Lake Formation simplifies the setup of secure data lakes on AWS. This service helps businesses ingest, catalog, and secure data from multiple sources, streamlining the creation of a governed data lake. It also integrates well with AWS analytics tools such as AWS Glue, Redshift, and Athena. If you already use ELT tools, Lake Formation can typically work alongside the ELT tools commonly used on AWS.

Built on Apache Spark, Databricks is a unified analytics platform that provides an integrated environment for data engineering, machine learning, and business analytics. It is widely used in data lake consulting for building collaborative data pipelines, performing real-time data analysis, and training machine learning models on big data.

Azure Data Lake Storage (ADLS) is a highly scalable data lake storage solution that integrates seamlessly with other Microsoft services such as Azure Data Factory, Power BI, and Synapse Analytics. It provides tiered storage for cost optimisation, along with robust security and governance features such as RBAC and encryption, making it a key component for cloud data lake consulting services.

Google Cloud provides secure and scalable data lake storage via Cloud Storage, which is optimised for both cold and hot data workloads. Paired with tools like BigQuery for real-time analytics and TensorFlow for machine learning, Google Cloud is a popular choice for organisations leveraging advanced analytics.

To build an effective data lake, following these design principles is essential:

Ensure your data lake can ingest data from multiple sources, such as databases, sensors, applications, and streaming platforms. Both batch and real-time streaming ingestion (for example, from Apache Kafka, an open-source distributed streaming platform used for stream processing) should be supported to handle structured and unstructured data alike.

Additionally, it’s crucial to ensure proper data integration pipelines that enable data from various sources to be seamlessly transformed and enriched for downstream analytics.
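As a rough illustration of an ingestion layer that serves both batch and streaming sources, the sketch below lands records unchanged in a raw zone laid out by source and date. The `land_records` function, the `raw/<source>/<date>/` layout, and the file name are assumptions for this example; the streaming side is simulated with small in-memory micro-batches rather than a real Kafka consumer.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def land_records(root: Path, source: str, day: date, records) -> Path:
    """Append records unchanged, as JSON lines, under raw/<source>/<date>/."""
    target_dir = root / "raw" / source / day.isoformat()
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "part-0000.jsonl"
    with target.open("a", encoding="utf-8") as handle:
        for record in records:
            handle.write(json.dumps(record) + "\n")
    return target


lake_root = Path(tempfile.mkdtemp())
today = date(2024, 1, 15)

# Batch path: a whole export is landed in one call.
batch_file = land_records(lake_root, "crm_export", today, [{"id": 1}, {"id": 2}])

# Streaming path: the same function is called once per small micro-batch.
for micro_batch in ([{"sensor": "a", "temp": 21.5}], [{"sensor": "b", "temp": 19.0}]):
    stream_file = land_records(lake_root, "sensor_stream", today, micro_batch)

stream_lines = stream_file.read_text(encoding="utf-8").splitlines()
```

Because both paths write the same raw format into the same layout, downstream transformation and enrichment jobs do not need to care how a record arrived.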

The storage layer forms the backbone of a data lake. It should be designed to be:

Cloud storage options such as Amazon S3, Azure Blob Storage, or Google Cloud Storage are widely used to implement cost-effective, scalable storage layers, a common recommendation by enterprise data lake solutions providers.

Data governance is a key consideration in ensuring data quality, security, and compliance in a data lake. Adopt a comprehensive data governance framework that includes:

Data lakes should support multi-modal analytics that allow for processing structured and unstructured data alike. Key technologies include:

To maintain optimal performance, partitioning and indexing data within your lake is crucial. Leverage columnar file formats like Apache Parquet or ORC to store large datasets, as these formats allow for faster query times and more efficient storage.
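To show why partitioning speeds up queries, here is a small sketch of partition pruning over Hive-style `key=value` directories. The object keys and the `partition_values` and `prune` helpers are hypothetical; query engines perform this step internally, and the sketch does not read any Parquet data.

```python
# Hypothetical object keys using Hive-style key=value partition directories.
OBJECT_KEYS = [
    "sales/year=2023/month=12/part-0.parquet",
    "sales/year=2024/month=01/part-0.parquet",
    "sales/year=2024/month=01/part-1.parquet",
    "sales/year=2024/month=02/part-0.parquet",
]


def partition_values(key: str) -> dict:
    """Extract partition columns from the key=value segments of a path."""
    return dict(
        segment.split("=", 1) for segment in key.split("/") if "=" in segment
    )


def prune(keys, **wanted):
    """Keep only files whose partition values match every requested filter."""
    return [
        key
        for key in keys
        if all(partition_values(key).get(col) == val for col, val in wanted.items())
    ]


january_files = prune(OBJECT_KEYS, year="2024", month="01")
```

A query filtered on January 2024 only needs to open two of the four files here; on a real lake the same idea can skip the vast majority of stored objects.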

Implement autoscaling in cloud environments to dynamically scale storage and compute resources based on usage, ensuring the system remains performant even under high demand.
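One common way to reason about autoscaling is a proportional rule: scale the worker count by the ratio of observed utilisation to target utilisation, within fixed bounds. The sketch below is an illustration of that rule only; the `desired_workers` function, its parameters, and the bounds are assumptions, and it is not the API of any particular cloud provider.

```python
import math


def desired_workers(current: int, observed_pct: int, target_pct: int,
                    min_workers: int = 1, max_workers: int = 20) -> int:
    """Scale worker count proportionally so utilisation moves toward the target."""
    if current < 1 or target_pct <= 0:
        raise ValueError("current must be >= 1 and target_pct must be > 0")
    proposed = math.ceil(current * observed_pct / target_pct)
    # Clamp so a metric spike cannot scale the cluster without limit.
    return max(min_workers, min(max_workers, proposed))


scale_out = desired_workers(current=4, observed_pct=90, target_pct=60)
scale_in = desired_workers(current=4, observed_pct=20, target_pct=60)
capped = desired_workers(current=10, observed_pct=300, target_pct=60)
```

The upper bound matters as much as the rule itself, since it is what keeps a runaway query from turning into a runaway bill.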

Data lakes require continuous monitoring to ensure performance and cost efficiency. Use monitoring tools like AWS CloudWatch, Azure Monitor, or open-source alternatives like Prometheus to track the health of your storage and processing layers.

Automate routine maintenance tasks such as data purging or archiving with tools like Apache Airflow or AWS Step Functions, reducing the operational overhead on your IT teams.
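The decision logic behind an automated archive-and-purge job can be very small. In the sketch below, the thresholds, the `retention_action` function, and the sample inventory are hypothetical; in practice a scheduler such as Airflow or Step Functions would call logic like this and then perform the actual move or delete.

```python
from datetime import date

# Hypothetical retention thresholds, in days since an object was last modified.
ARCHIVE_AFTER_DAYS = 90
PURGE_AFTER_DAYS = 365 * 7


def retention_action(last_modified: date, today: date) -> str:
    """Return 'keep', 'archive' or 'purge' based on the object's age."""
    age_days = (today - last_modified).days
    if age_days >= PURGE_AFTER_DAYS:
        return "purge"
    if age_days >= ARCHIVE_AFTER_DAYS:
        return "archive"
    return "keep"


today = date(2025, 1, 1)
inventory = {
    "logs/2024-12-20.jsonl": date(2024, 12, 20),
    "logs/2024-06-01.jsonl": date(2024, 6, 1),
    "logs/2016-01-01.jsonl": date(2016, 1, 1),
}
plan = {key: retention_action(modified, today) for key, modified in inventory.items()}
```

Producing a plan first and acting on it in a separate step makes it easy to review what a maintenance run would do before anything is deleted.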

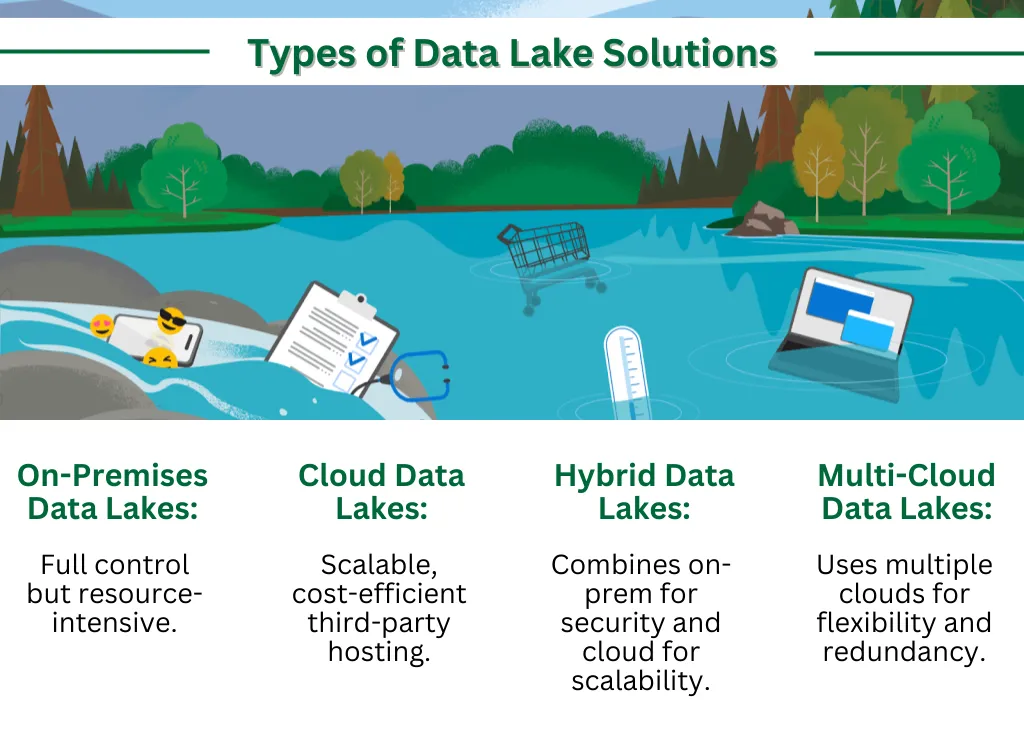

A data lake allows businesses to store large volumes of raw data at a low cost, bypassing the expensive upfront ETL processes that traditional data warehouses require. As data lake solution firms often recommend, organisations can instead process data on demand, which helps reduce upfront infrastructure costs and transformation expenses.

Data lakes act as a centralised repository where all types of data can be stored—structured, semi-structured, and unstructured. This enables organisations to manage diverse data sources (e.g., CRM systems, IoT devices, social media platforms) under a single architecture, simplifying data analytics and reporting.

Data lakes are designed to support advanced analytics such as machine learning, AI, predictive modeling, and deep learning. By storing historical and real-time data together, businesses can generate insights that drive innovation, personalization, and predictive capabilities. Moreover, integrating data lakes with data visualization tools enables businesses to create interactive dashboards and visually rich reports. With the help of data visualization consulting services, organizations can customize these visualizations to better understand complex data patterns, identify trends, and enhance data-driven decision-making. This combination of advanced analytics and data visualization solutions empowers decision-makers to monitor performance, communicate insights clearly, and drive strategic actions with more confidence.

With data lakes, businesses can quickly respond to new data sources or evolving business needs without the need for rigid data preparation or ETL processes. This agility allows for faster innovation and quicker decision-making.

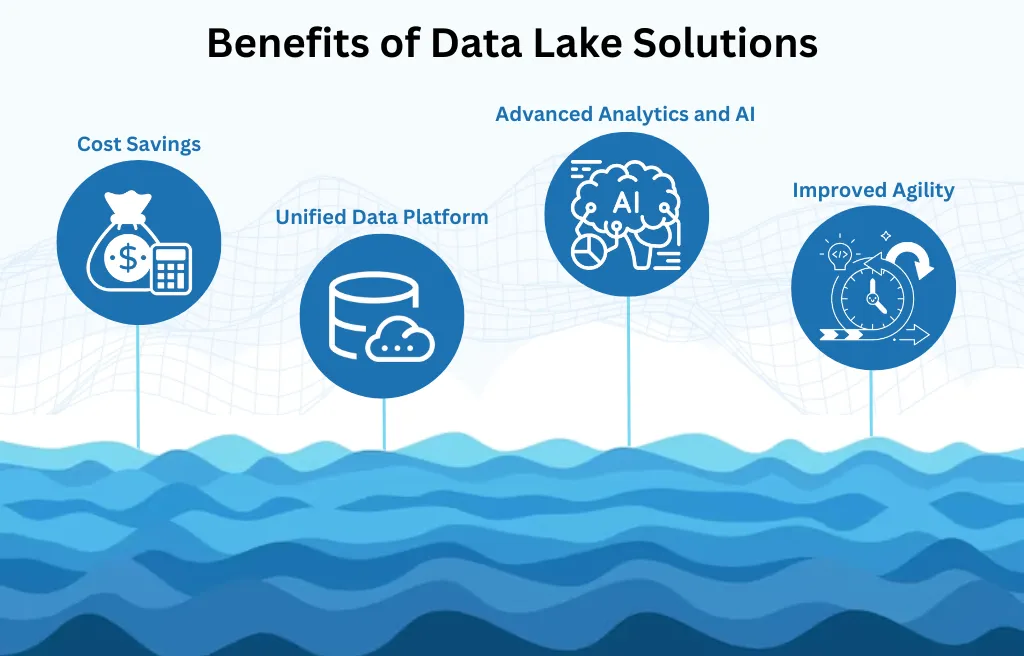

In the world of data management, three distinct architectures have emerged as prominent solutions: Data Lakes, Data Warehouses, and Data Lakehouses. Each architecture serves different purposes and is suited for different types of workloads. Below, we'll explore their differences, use cases, and how they fit into modern data strategies.

A Data Lake is a centralized repository that allows organizations to store vast amounts of raw, unstructured, semi-structured, and structured data. It can scale to handle petabytes or even exabytes of data, with the flexibility to ingest data in its native format, such as text files, logs, images, videos, and more.

A Data Warehouse is a highly structured and optimized environment designed for storing large amounts of organized, processed data, typically in a relational database format. It is part of comprehensive data warehouse services, which are ideal for running complex queries and producing business intelligence reports from structured data.

The Data Lakehouse is a relatively new architecture that aims to combine the best features of both data lakes and data warehouses. It allows data lake solution experts to leverage both the flexibility of data lakes (handling raw and unstructured data) and the performance and structure of data warehouses (enabling faster querying of structured data).

When designing a data lake solution, it’s crucial to consider the architecture, which can vary depending on the use case and business requirements. Here are three core architectures commonly used:

In a centralized architecture, all data from different business units or departments is stored in a single, unified data lake. This type of architecture is ideal for organisations that want to manage all their data from a single repository, making it easier to perform enterprise-wide analytics.

Organisations often rely on enterprise data lake consulting services to design centralized architectures that align with their business goals and security needs.

In a decentralized architecture, each department or business unit manages its own data lake. This approach is popular in large enterprises where different teams require autonomy over their data while still benefiting from the scalability of a data lake.

To navigate the complexity of decentralized architectures, businesses frequently leverage enterprise data lake services to ensure effective governance and seamless integration between department-specific data lakes.

Organisations adopting cloud strategies may deploy multi-cloud or hybrid data lake architectures. In this setup, a portion of the data lake is hosted on-premises while another part resides in a cloud provider. Alternatively, multiple clouds may be used depending on cost, data residency, or compliance needs.

Organisations often engage enterprise data lake consulting services to implement robust hybrid architectures and ensure seamless multi-cloud data integration.

Without proper governance and metadata management, data lakes can turn into data swamps, where data becomes disorganized, difficult to access, and largely unusable. To prevent this, businesses must implement metadata catalogs, data quality controls, and a robust data governance framework.
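A metadata catalog can be pictured as a registry that refuses datasets nobody owns or has described. The in-memory `Catalog` and `CatalogEntry` classes below are a hypothetical stand-in for a real catalog service, written only to illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    path: str
    owner: str
    description: str
    tags: set = field(default_factory=set)


class Catalog:
    """In-memory stand-in for a metadata catalog service."""

    def __init__(self):
        self._entries = {}

    def register(self, entry: CatalogEntry) -> None:
        # Refuse ownerless or undocumented datasets; these are what turn a lake into a swamp.
        if not entry.owner or not entry.description:
            raise ValueError(f"{entry.path}: owner and description are required")
        self._entries[entry.path] = entry

    def search(self, tag: str) -> list:
        """Return the sorted paths of all datasets carrying the given tag."""
        return sorted(p for p, e in self._entries.items() if tag in e.tags)


catalog = Catalog()
catalog.register(CatalogEntry("raw/crm/contacts", "sales-data", "Daily CRM contact export", {"crm", "pii"}))
catalog.register(CatalogEntry("raw/iot/telemetry", "platform", "Device telemetry stream", {"iot"}))

try:
    catalog.register(CatalogEntry("raw/tmp/dump", "", ""))
    rejected = False
except ValueError:
    rejected = True
```

Enforcing the rule at registration time is the design choice that matters: data that cannot be described does not enter the catalog in the first place.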

Due to the large volumes of sensitive data stored in data lakes, they are attractive targets for cyberattacks. Ensuring data is encrypted both at rest and in transit, applying role-based access controls (RBAC), and adhering to compliance regulations like GDPR and HIPAA are critical to securing your data lake.
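Role-based access control boils down to a deny-by-default lookup from users to roles to permissions. The roles, users, and zone names in this sketch are invented for illustration; managed data lake platforms provide their own policy engines for this.

```python
# Hypothetical roles and zone-level permissions, expressed as (zone, action) pairs.
ROLE_PERMISSIONS = {
    "data_engineer": {("raw", "read"), ("raw", "write"), ("curated", "read"), ("curated", "write")},
    "analyst": {("curated", "read")},
}

# Hypothetical user-to-role assignments.
USER_ROLES = {"asha": {"data_engineer"}, "ben": {"analyst"}}


def is_allowed(user: str, zone: str, action: str) -> bool:
    """Allow the action only if one of the user's roles grants it; deny by default."""
    return any(
        (zone, action) in ROLE_PERMISSIONS.get(role, set())
        for role in USER_ROLES.get(user, set())
    )
```

With this table, `is_allowed("ben", "raw", "read")` is false because analysts are limited to the curated zone, and an unknown user is denied everything.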

As the volume of data grows, maintaining data quality becomes increasingly difficult. Implementing regular data validation, cleansing processes, and schema-on-read approaches can help avoid issues related to inconsistent, incomplete, or incorrect data.
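A regular validation pass can be as simple as running each record through a set of rules and quarantining the failures. The field names and rules in the `validate` function below are hypothetical examples, not a complete data quality framework.

```python
def validate(record: dict) -> list:
    """Return a list of human-readable problems; an empty list means the record passes."""
    problems = []
    if not record.get("order_id"):
        problems.append("missing order_id")
    amount = record.get("amount")
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if not isinstance(amount, (int, float)) or isinstance(amount, bool):
        problems.append("amount is not numeric")
    elif amount < 0:
        problems.append("amount is negative")
    return problems


records = [
    {"order_id": "A1", "amount": 10.0},
    {"order_id": "", "amount": 5},
    {"order_id": "A3", "amount": -2},
    {"order_id": "A4", "amount": "ten"},
]

clean = [r for r in records if not validate(r)]
quarantined = {r["order_id"] or "<none>": validate(r) for r in records if validate(r)}
```

Keeping the rejected records together with their reasons, rather than silently dropping them, gives data owners something concrete to fix at the source.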

Building and maintaining a data lake requires expertise in cloud architecture, data governance, and data engineering. Operational challenges include managing multiple data sources, ensuring performance optimisation, and reducing latency in query times.

Data lakes provide flexibility and scalability that enable businesses across industries to efficiently store, manage, and analyze large volumes of diverse data. Below are some prominent use cases that demonstrate how organizations can leverage data lakes to drive innovation and achieve business objectives:

Data lakes in healthcare facilitate the storage and analysis of vast datasets, including patient records, medical images, genomic data, and data from healthcare IoT devices. By integrating these diverse data sources, healthcare organisations can:

In the fast-paced world of retail, data lakes allow companies to store and analyze a wide range of data, including transactional data, customer behavior, social media interactions, and inventory data. Retailers can leverage data lakes to:

Data lakes are pivotal in the financial services sector, where businesses deal with massive datasets from multiple sources, including transactional systems, market data, social media, and customer profiles. Financial institutions use data lakes to:

Manufacturers can use data lakes to consolidate and analyze data from production lines, machine sensors, IoT devices, and supply chain systems. These data lakes support:

Data lakes help media companies aggregate and analyze large amounts of content, audience data, and user interactions across multiple platforms. This allows them to:

Energy companies leverage data lakes to store and analyze data from smart meters, energy grids, and renewable energy sources. This allows them to:

Government agencies use data lakes to centralize vast amounts of data from citizen services, public safety, transportation, and emergency response systems. These agencies can:

Telecom companies use data lakes to analyze vast amounts of network data, customer usage patterns, and service performance metrics. They can:

1. Growth in Adoption:

2. Cloud Shift:

3. Investment Increase:

4. Real-Time Analytics Focus:

5. Integration with AI/ML:

1. Data Volume Growth:

2. Diverse Data Sources:

3. Improved Decision-Making:

4. Operational Efficiency:

5. Cost Reduction:

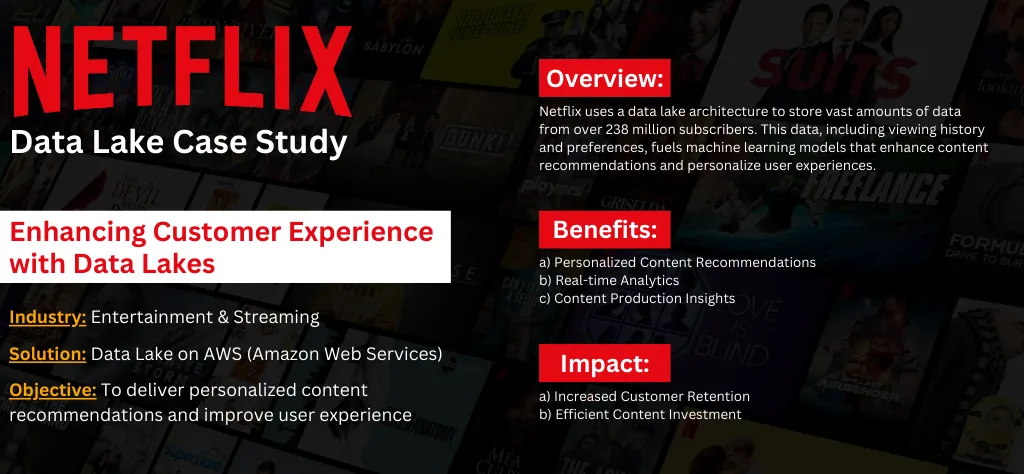

Industry: Entertainment & Streaming

Solution: Data Lake on AWS (Amazon Web Services)

Objective: To deliver personalized content recommendations and improve user experience.

Netflix uses a data lake architecture to store massive amounts of raw, unstructured, and semi-structured data generated from over 238 million global subscribers. Data lakes allow Netflix to aggregate user activity data such as viewing history, preferences, and interactions across various devices. This data is critical for their machine learning models, which are used to optimize content recommendations and create personalized viewing experiences.

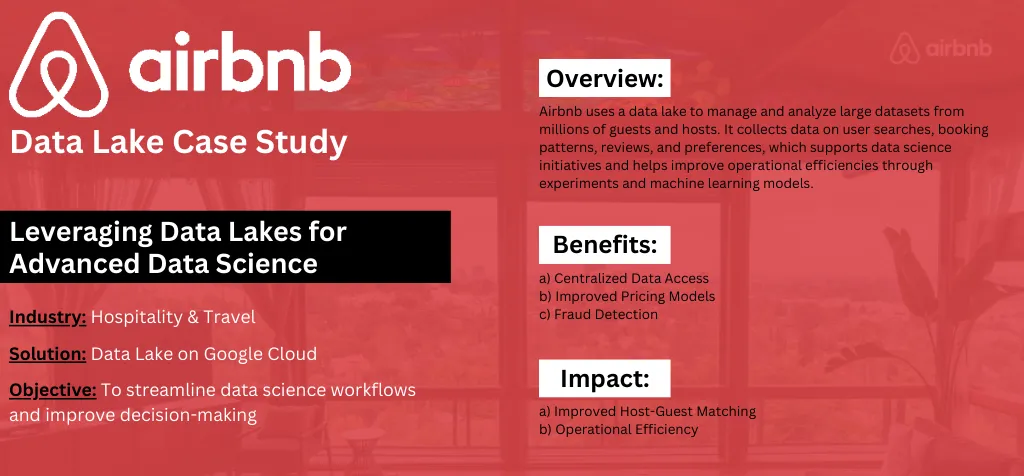

Industry: Hospitality & Travel

Solution: Data Lake on Google Cloud

Objective: To streamline data science workflows and improve decision-making.

Airbnb relies on its data lake to manage and analyze large-scale datasets generated from millions of guests and hosts around the world. Airbnb collects data on user searches, booking patterns, host reviews, and location preferences. The data lake serves as a foundation for the company’s data science initiatives, enabling teams to run experiments and deploy machine learning models that improve operational efficiencies.

Industry: Financial Services

Solution: Data Lake on AWS (Amazon Web Services)

Objective: To improve customer service, fraud detection, and operational efficiencies.

Capital One, one of the leading financial institutions in the U.S., uses a data lake to collect and store customer transaction data, financial records, and other operational data. By leveraging AWS’s scalable storage solutions, Capital One has created a unified data environment to run advanced analytics and machine learning models.

Data lakes offer an incredible opportunity for businesses to harness the power of their data. However, a poorly designed or managed data lake can quickly devolve into a costly and inefficient system. By following the right architecture, adhering to design principles, and implementing best practices, organizations can build a scalable, flexible, and efficient data lake that empowers analytics, innovation, and data-driven decision-making.

Hexaview Technologies offers data lake consulting services that empower businesses to efficiently manage and unlock the full potential of their data. With the increasing role of data in shaping strategic decisions, Hexaview Technologies' tailored approach to building, optimizing, and maintaining data lakes ensures organizations can harness the power of big data for sustained growth and innovation.

Leveraging Hexaview's enterprise data lake consulting services can ensure that your data lake solution is optimized for your specific business needs, enabling you to transform raw data into actionable insights. Whether you’re looking to streamline data management, enhance analytics capabilities, or scale your infrastructure, Hexaview Technologies is here to guide you every step of the way.
